An adventure in SIMD

February 15, 2020

There’s an area in the Rust standard library that can unlock a set of performance improvements I often found was at the core of some of the fastest libraries available in the language. I started my adventure in SIMD to building an optimised function using these tools, understand if it was worth the investment and ultimately to have fun challenging myself.

My goal was simple

I wanted to iterate over an array and find the index of the smallest or largest item. In most numerical libraries

functions would be called argmin and argmax respectively. Rust, like Python, doesn’t have these as part of the

standard library and at the time I started this project I couldn’t see any packages on

crates.io that provided the implementation for Rust’s array or Vec types. I

think this is partly due to several factors, one of them being…

It’s extremely trivial to implement from scratch.

fn argmin<T: PartialOrd + Copy>(arr: &[T]) -> usize {

let mut index: usize = 0;

let mut low = arr[0];

for (i, item) in arr.iter().enumerate() {

if item < &low {

low = *item;

index = i;

}

}

index

}Rust jargon aside, we’re iterating over all items in the array, comparing the last know low to the current item, and updating the index if necessary.

I later came to find, when dealing with f32’s things started to get tricky, as the sort order couldn’t be assumed for

NaN’s, or even computed in my case.

The general problem

I needed to carry out an exhaustive search since our low could be anywhere in the array. Unfortunately, there are no early bailouts here, I needed to find a method of processing the data faster. Which led me to the development of the argmm crate.

This post is a high-level summary of the implementation. I recommend checking out my GitHub repo linked above if you’re interested in how it all came together.

So what is SIMD?

SIMD is an acronym for Single instruction multiple data, a fancy way of saying we can do lots of math with a single CPU instruction. If you’re using a modern Intel CPU, then you’ll likely have this available as standard.

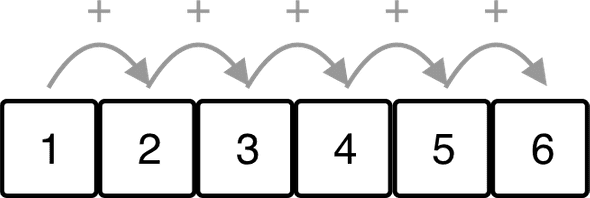

As an example, imagine we have to find the sum of all elements in an array. This would require us to iterate over the entire collection and keep a running total along the way.

SIMD improves on this by carrying out the addition on multiple data points at once, provided that they fit into a vector.

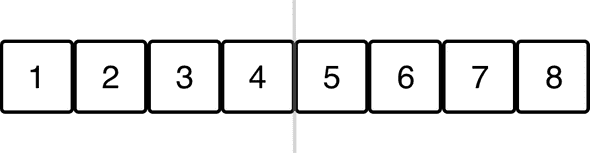

A vector being similar to a fixed size array. In the above example, if we were using the i32 type and a 128-bit SIMD

vector, we could fit at most 4 items into the vector.

That alone would not be of any benefit to us since we can’t simply add a scalar value to a SIMD vector. We need another SIMD vector of the same size to benefit from the operations.

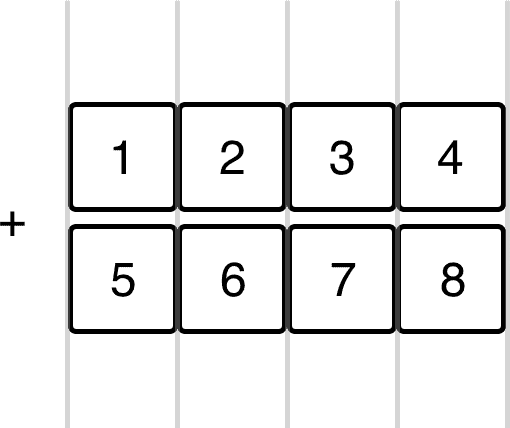

If we were to continue with the addition example, we have the first 8 elements in our sum. We stack the vectors on top of each other and add each of the corresponding columns together in a single CPU instruction.

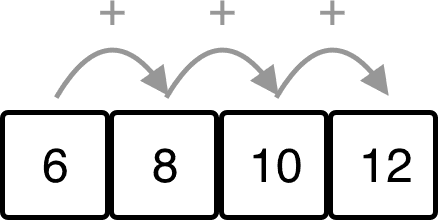

You’re left with a new SIMD vector with your running total for each column but this does bring me onto another key point

about SIMD instructions. They’re made for efficient vertical operations and not horizontal ones like below.

This means if you are carrying out a summation, you still need to sum the column totals to get your answer. This may feel like a design flaw but if you look at the number of CPU instructions you’ve saved, you can start to see how exhaustive searches could benefit from the operation.

Believe it or not, the Rust compiler tries to find situations where it can automatically do this for you, it’s known as automatic vectorization, but it’s not always clear to the compiler where it can be done.

When things don’t quite fit

In most real-world situations, you don’t have control over the size of the array, for example, you may end up with an array that falls short of the vectors minimum size or one that has too many elements. In either case, you wouldn’t be able to carry out the SIMD instructions on the entire array.

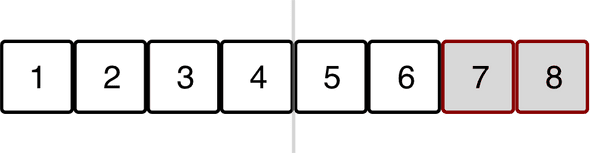

After putting some thought to the problem, by sticking with a 128-bit vector, using 32-bit types, we only ever have 2

scenarios where we would not be able to fully utilise SIMD.

Arrays less than 8 elements long

In this situation, we wouldn’t be able to carry out a SIMD instruction because elements 7 and 8 are missing. We have a choice of padding our array to the desired size or computing our total by summing each element as we would normally. From a performance perspective, this would be our worst case.

Arrays with a length not divisible by 4

Here we can process the first 8 elements with SIMD but have 9 and 10 which don’t fit into a vector. We could again pad the array or compute the sum of the extra elements as normal. Worst case here, 3 elements won’t fit into a vector.

The trade-off

In the end, I decided to go with processing elements normally when they didn’t fit into a SIMD vector. This would penalise performance on smaller arrays but as the array size increased, the performance hit would become negligible.

One of the reasons I chose not to pad the arrays was down to the set of SIMD operations I was using. Instead of summing values in the vectors, I needed to compare them to each other.

Padding the array with zeros and try to find the min or max value would break the comparison. Zero could well be the min or max, making it difficult to know the true low or high.

Keeping track of the index was also a challenge, it involved a separate SIMD array and some masking and swapping.

Benchmarks

The f32 SIMD implementation on average saw a 220% improvement compared to the trivial implementation. The i32

implementation, on the other hand, was a bit slower with a 70% improvement.

Besides some SIMD operations not performing particularly well and alternative solution being found. I saw the biggest gain from my choice of loop. This is something I was partly aware of but I didn’t realise the extent of the performance gains.

Your choice of loop matters

for i in (0..sim_arr.len()).step_by(4) { ... }Originally I decided to iterate over a range, stepping by 4 elements and indexing into my array. Since I was dealing with a SIMD vector, I knew the array was divisible by 4, so this would give me equally sized pieces in each iteration of the loop.

Running some profiling with Valgrind I could see that the loop was potentially the biggest bottleneck in the code. I

could vaguely remember a blog post about for loops in Rust and how they could be inefficient at times, so I switched

over to a functional style loop.

sim_arr.iter().step_by(4).for_each(|step| { ... }Making this change saw on average a 26% improvement for f32 and 30% for i32. From what I understand, using this type

of iteration helps the compiler enable optimisations. I couldn’t believe the improvement in speed so I started to pay

attention to the different types of iterator methods that were available.

sim_arr.chunks(4).for_each(|step| { ... }After poking around the std library docs I managed to find the chunks method. This made sense, as I was looking for a block of 4 elements and this way I was being returned a section of the array rather than an index into it.

This change saw a further 50% improvement for f32. At the time I had dropped the i32 implementation as I was focused

on the f32 implementation across the line.

As a side note, i32 and f32 use a different sets of SIMD instructions.

sim_arr.chunks_exact(4).for_each(|step| { ... }Consulting the documentation once again, I noticed there was another chunks method that didn’t carry out an internal check on the remaining elements as it could potentially return a chunk less than the desired size.

Thankfully chunks_exact returned an array of the requested size every time and making this final change saw an

approximate 20% gain. I decided to add i32 support again, although not as impressive as the f32 it was still an

improvement.

Conclusion

If anything I learned the value of benchmarking and profiling. SIMD can provide enormous performance gains but not without structuring your code in a way that the compiler can benefit from. The loop issue had me scratching my head for weeks because I was distracted by the choice of SIMD instruction I was using and there potential performance gains.

The lines of code and development time are also a huge consideration when dealing with SIMD. Unless you have to, chasing

performance gains can be extremely time consuming and full of pitfalls. For my use case, I had an algorithm that

continually needed to find the minimum and maximum value in large arrays as part of the main loop, so a performance gain

in the argmin and argmax functions were of extreme benefit to me.

I can see myself venturing into the world of SIMD again but I would be cautious about investing my time unless it’s warranted.